Why in the news?

NITI Aayog released 'White Paper: Responsible AI for All (RAI) on Facial Recognition Technology (FRT)'.

More about the news

- The paper examines FRT as the first use case under NITI Aayog's RAI principles and aims to establish a framework for responsible and safe development and deployment of FRT within India.

About Facial Recognition Technology (FRT)

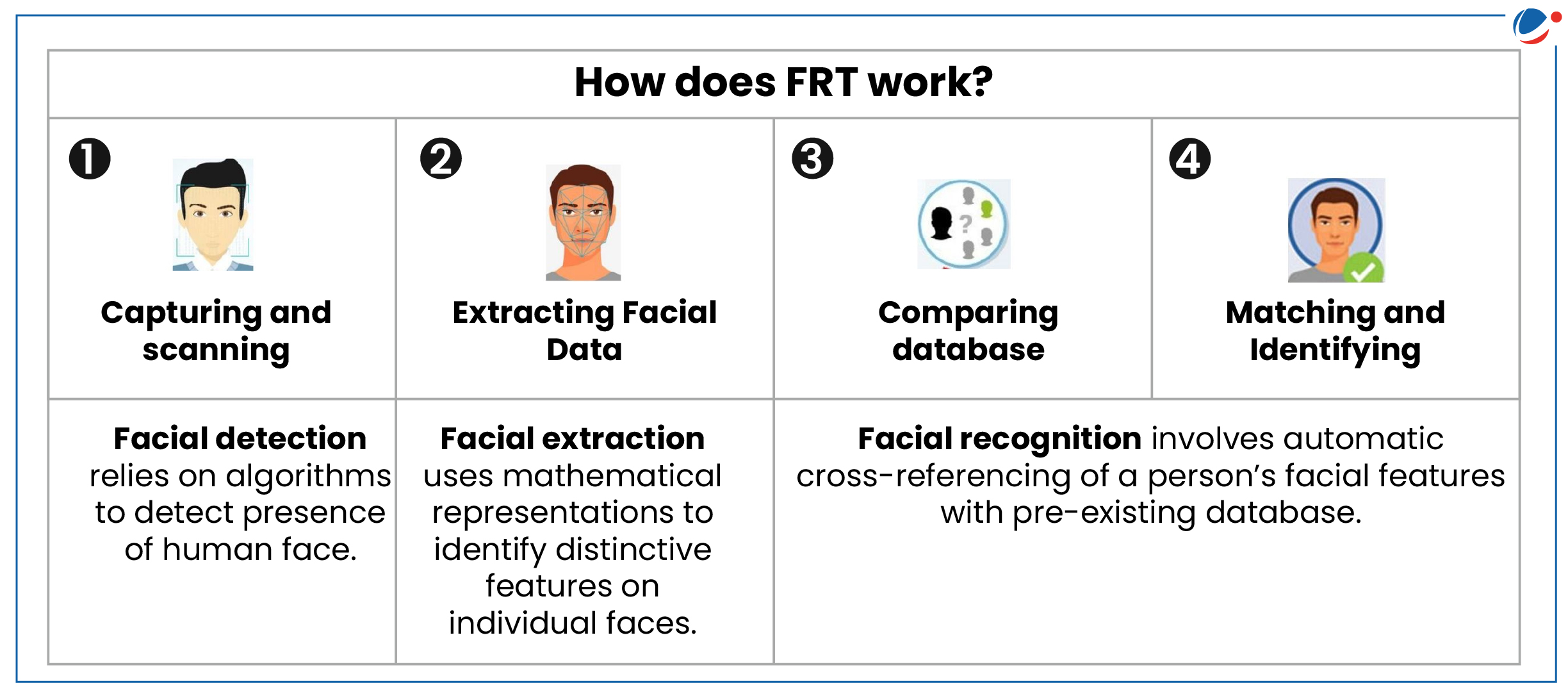

- It is an artificial intelligence (AI) system that identifies or verifies a person from images or video data using complex algorithms.

- FRT can be used for two purposes:

- 1:1 verification of identity: A facial map is obtained and matched against the person's photograph in a database. E.g., 1:1 matching is used to unlock phones.

- 1:n identification of identity: A facial map is compared against an entire database to identify the person in a photograph or video. E.g., 1:n matching is used for mass monitoring and surveillance.
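
The difference between the two modes can be sketched in a few lines of Python. This is only an illustration under stated assumptions: faces are assumed to be already reduced to numeric templates (short lists of numbers here), similarity is assumed to be cosine similarity, and the function names, the threshold of 0.8 and the toy gallery are all hypothetical rather than taken from the white paper.

```python
import math


def cosine_similarity(a, b):
    """Similarity of two non-zero templates, in the range [-1, 1]."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def verify(probe, enrolled, threshold=0.8):
    """1:1 verification: compare the probe with ONE claimed identity."""
    return cosine_similarity(probe, enrolled) >= threshold


def identify(probe, gallery, threshold=0.8):
    """1:n identification: search the WHOLE gallery for the best match.

    Returns the best-scoring identity at or above the threshold,
    or None if nobody in the gallery is similar enough.
    """
    best_id, best_score = None, threshold
    for person_id, template in gallery.items():
        score = cosine_similarity(probe, template)
        if score >= best_score:
            best_id, best_score = person_id, score
    return best_id


# Hypothetical toy data: real templates have hundreds of dimensions.
gallery = {
    "person_a": [1.0, 0.0, 0.0],
    "person_b": [0.0, 1.0, 0.0],
}
probe = [0.9, 0.1, 0.0]

print(verify(probe, gallery["person_a"]))   # one comparison
print(identify(probe, gallery))             # one comparison per enrolled person
print(identify([0.0, 0.0, 1.0], gallery))   # unknown face
```

Verification makes a single comparison against a claimed identity, while identification makes one comparison per enrolled person, so the chance of a false match grows with the size of the database. This is one reason 1:n use raises sharper surveillance and misidentification concerns.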

Applications and use-cases of FRT

- Security related Uses

- Law and order enforcement:

- Identification of persons of interest, including suspected criminals. E.g., Uttar Pradesh's 'Trinetra' for real-time identification of criminals.

- Identification of Missing Persons. E.g., Telangana's 'Darpan' for matching photos and identifying missing children.

- Monitoring and surveillance. E.g., China's Skynet Project.

- Immigration and border management. E.g., Canada's 'Faces on the Move' supports border protection by preventing people from entering the country with fake identification.

- Crowd control. E.g., pan-tilt-zoom (PTZ) surveillance cameras used in Prayagraj, Uttar Pradesh, to manage large crowds during Kumbh Mela, 2021.

- Non-Security related Uses

- Verification and authentication of individual identity for access to products, services, and public benefits using biometrics. E.g., using Aadhaar for authentication based on facial recognition.

- Ease of access to services. E.g., contactless onboarding at airports through Digi Yatra.

- Ease of use, such as unique IDs in educational institutions. E.g., Central Board of Secondary Education's 'Face Matching Technology Educational' for authentication to access academic documents.

What are the risks associated with FRT systems?

- Inaccuracies: FRT systems may misidentify people because of inaccuracies resulting from:

- Automation bias and underrepresentation in databases: It may lead to disparities in AI systems based on skin tone, race, gender etc.

- Lack of accountability

- Technical factors: This includes intrinsic factors like facial expression, aging, plastic surgery etc. and extrinsic factors like illumination, pose variation, occlusion, or quality of image.

- Glitches or perturbations: FRT systems can be sabotaged by minor tweaks that are insignificant to humans but can render the technology ineffective.

- Lack of training of human operators: FRT systems require a human operator to either verify or act on their outputs, so an untrained operator may misread or over-rely on those outputs.

- Concerns regarding accountability, legal liability and grievance redressal: These arise from the complexity of the computational algorithms and from the trade-secret and intellectual-property protections surrounding FRT systems.

- Rights-based issues: The Supreme Court, in Justice K.S. Puttaswamy v. Union of India (2017), recognised the right to informational autonomy as a facet of the right to privacy under Article 21 of the Constitution. FRT systems may violate these rights due to:

- Purpose creep: Using personal data such as biometric facial images in ways contrary to, or in addition to, the stated purpose goes against the concept of informational autonomy.

- Data leaks: Weak institutional data security practices may lead to data breaches and unauthorised access of personal data.

- Lack of meaningful consent: Making facial recognition mandatory for access to public services, public benefits or rights without adequate alternative means to those services and rights undermines meaningful consent.

- Private Security Use: Incentivization of private security firms to flag suspicious individuals using FRT can lead to excessive and potentially unjustified surveillance activities.
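
The disparity risk listed under inaccuracies can be made concrete by comparing error rates across demographic groups. The sketch below is a minimal illustration, not a method prescribed by the white paper: the function name, the group labels and every record are made up, and the metric shown (false match rate, the share of different-person comparisons wrongly reported as a match) is just one of several error measures an audit might use.

```python
from collections import defaultdict


def false_match_rate_by_group(records):
    """Compute the false match rate separately for each group.

    records: iterable of (group, same_person, predicted_match) tuples,
    where same_person is the ground truth and predicted_match is the
    system's decision. Only different-person trials are counted.
    """
    impostor_trials = defaultdict(int)
    false_matches = defaultdict(int)
    for group, same_person, predicted_match in records:
        if not same_person:
            impostor_trials[group] += 1
            if predicted_match:
                false_matches[group] += 1
    return {g: false_matches[g] / n for g, n in impostor_trials.items()}


# Made-up evaluation records, for illustration only.
records = [
    ("group_x", False, False),
    ("group_x", False, False),
    ("group_x", False, True),
    ("group_x", False, False),
    ("group_y", False, True),
    ("group_y", False, True),
    ("group_y", False, False),
    ("group_y", False, False),
    ("group_y", True, True),   # genuine pair, ignored by this metric
]

rates = false_match_rate_by_group(records)
print(rates)
```

In this toy data the system wrongly matches group_y twice as often as group_x. A gap of that kind on real evaluation data is what underrepresentation in training databases can produce, and it would go unnoticed if only a single overall accuracy figure were reported.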

Way Forward: Recommendations of NITI Aayog for responsible use of FRT

- Principle of privacy and security: Establish a data protection regime that fulfils the three-pronged test of legality, reasonability and proportionality set by the Supreme Court in the Puttaswamy judgement.

- E.g., the Digital Personal Data Protection (DPDP) Act, 2023 aims to regulate the processing of digital personal data while recognising both individuals' right to protect their data and the need to process it for lawful purposes.

- Holistic governance framework: Set out the extent of liability arising from any harm or damage caused by the use of an FRT system.

- Adopting Privacy by Design (PBD) principles: Such as obtaining users' explicit consent.

- Principles of accountability: Address issues pertaining to transparency, algorithmic accountability and AI biases to secure public trust.

- Also, establish an accessible grievance redressal system for any FRT related issues.

- Ensuring safety and reliability: Publish standards for FRT covering explainability, bias and errors.

- Principle of protection and reinforcement of positive human values: Constitute an ethics committee to assess ethical implications and oversee mitigation measures.